GaMeS: Mesh-Based Adapting and Modification of Gaussian Splatting

These are my notes on the paper GaMeS: Mesh-Based Adapting and Modification of Gaussian Splatting.

This is not meant to be a review exhaustively covering the algorithm and implementation details. Rather, this is a subjective take on the amazing paper, with my own takeaways included at the end. I haven’t gotten around to adding the references properly. Do excuse me for that!

Introduction

While previous works like SuGaR aligned Gaussians to meshes, they didn’t “truly” combine them. GaMeS presents a way to directly connect meshes and Gaussian splats. This makes it possible to modify the Gaussians by simply modifying the mesh, which in turn allows for real-time modification and fast rendering, e.g. for dynamic scenes and animations. Personally, I don’t think the paper is very difficult; rather, I struggled with the intuition behind it. So I’m going to discuss that first, along with some basic terminology.

Intuition

1. SuGaR vs GaMeS

In the paper’s introduction section, GaMeS presents a comparison to SuGaR. To avoid confusion, let’s first clear this up. The two papers serve different purposes entirely.

- The purpose of SuGaR is to derive a high-quality mesh from a 3DGS model. In order to do this, it adds regularization terms to align the Gaussians to the surface of the scene, so that a smooth mesh can be extracted.

- The goal of GaMeS is not to create a perfect mesh. Rather, it gives a controllable structure to the Gaussians by connecting them with meshes. This is what allows for editing and modification. GaMeS can do this with or without a mesh to begin with. When a mesh isn’t available, GaMeS estimates one from the Gaussian splats. While this estimated mesh (called the pseudo-mesh) doesn’t perfectly capture the object’s surface, it provides enough structure to enable editing and modification of the Gaussians.

2. If Gaussian splats are so useful, why didn’t we just use them with meshes before? In the case of SuGaR, extracting meshes that nicely wasn’t something we could do before. But here, we’ve already had meshes for a long time.

Yes, meshes have been around for a long time. They offer explicit structures, making it easy to modify an object. This makes them very versatile for 3D modeling, animation, and simulations.

Gaussian splats, on the other hand, represent a scene or object as a collection of ellipsoids. They are great for smooth and fast rendering (as presented in the seminal 3DGS paper). However, they lack the explicit geometric structure of a mesh. A scene can have an arbitrary number of Gaussians, and editing hundreds of thousands of them manually is impractical.

GaMeS addresses this by binding Gaussians to the mesh. Gaussian Splatting has blown up in popularity recently, and GaMeS introduces a way to combine meshes and Gaussians to get the best of both worlds.

3. So?

Meshes are controllable. Gaussians provide explicit volumetric properties. GaMeS bridges the gap.

Oh and also, as a result of this sweet combination, GaMeS allows for real-time modifications. 🔥

Theory

I would like to address two key ideas here: Mesh Parameterization of Gaussians, and Pseudo-Mesh Initialization and Distribution. Before that, let’s briefly go over how the whole thing works.

The GS technique captures a 3D scene through an ensemble of 3D Gaussians. GaMeS aims to parameterize the Gaussian components using the vertices of a mesh face \(V\). This means that for each triangle face of the mesh, GaMeS defines one or more Gaussians whose position and shape are determined by the geometry of the triangle. If we have an existing mesh, GaMeS uses it to place Gaussians on each triangle face. When no mesh is provided, GaMeS can estimate a pseudo-mesh by training the Gaussians, or use methods like FLAME to create the mesh. During training, GaMeS then optimizes these Gaussians on the mesh using the connecting parameters. Afterwards, when we edit the mesh, the Gaussians adapt automatically, since their means and covariances are derived directly from the mesh vertices.

Now, let’s look at the math in detail.

a. Gaussian Parameterization on Mesh Faces

Each 3D Gaussian in GS is defined by its position (mean), covariance matrix (rotation and scale), opacity and color (spherical harmonics).

Each triangle face in the mesh has three vertices: \(v_1, v_2, v_3\). The Gaussians associated with the face are defined using these vertices.

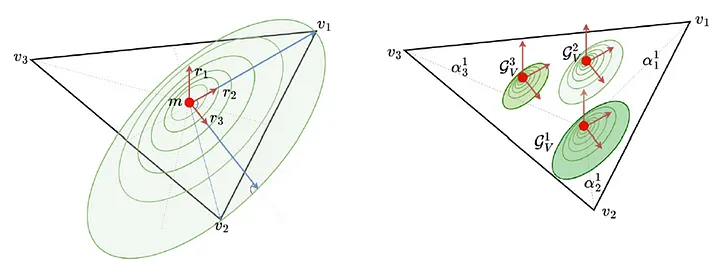

In the figure above, the black triangle represents a given mesh face, with \(v_1, v_2, v_3\) being its three vertices. Specifically, we want to determine the shape (i.e. the mean and the covariance matrix) of appropriate Gaussians using this face.

Mean of the Gaussian (Position):

GaMeS expresses the mean of a Gaussian as a convex combination of the vertices \(v_1, v_2, v_3\). This is done using barycentric coordinates.

\[m_V(\alpha_1, \alpha_2, \alpha_3) = \alpha_1 v_1 + \alpha_2 v_2 + \alpha_3 v_3\]

where \(\alpha_1 + \alpha_2 + \alpha_3 = 1\), and \(\alpha_1, \alpha_2, \alpha_3\) are trainable parameters. The barycentric weights adjust the relative influence of each vertex over the Gaussian’s position.
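To make the barycentric mean concrete, here is a minimal sketch in plain Python. The function names and the example triangle are hypothetical, and using a softmax to keep the trainable weights positive and summing to 1 is an assumption made for illustration, not something these notes take from the paper.

```python
import math

def softmax(logits):
    """Map unconstrained values to positive weights that sum to 1.
    Assumption: one simple way to keep the alphas a convex combination."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def gaussian_mean(alphas, v1, v2, v3):
    """Mean as a barycentric combination of the three triangle vertices."""
    a1, a2, a3 = alphas
    return tuple(a1 * p + a2 * q + a3 * r for p, q, r in zip(v1, v2, v3))

# Hypothetical triangle and raw trainable values (illustrative only).
v1, v2, v3 = (0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0)
alphas = softmax([0.0, 0.0, 0.0])  # equal weights place the mean at the centroid
mean = gaussian_mean(alphas, v1, v2, v3)

# Editing the mesh moves the Gaussian: lift v3 by 3 units along z.
moved = gaussian_mean(alphas, v1, v2, (0.0, 3.0, 3.0))
```

With equal weights the mean sits at the centroid, and moving a single vertex drags the mean along with it while the weights stay untouched.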

Covariance Matrix (Rotation and Scale)

The covariance matrix in 3DGS is factorized as:

\[\Sigma = R^T S S R\]

Now, the new rotation matrix \(R_v\) is built using orthonormal vectors \(r_1, r_2, r_3\).

- \(r_1\) is defined by the normal vector to the triangle.

- \(r_2\) is a unit vector that points from the center of the triangle to vertex \(v_1\).

- Since \(r_2\) lies in the plane of the triangle and \(r_1\) is normal to that plane, \(r_1\) and \(r_2\) are orthogonal by construction. We want \(r_3\) to be such that 1) \(R_v\) is orthonormal, and 2) \(r_2\) and \(r_3\) together span the plane of the triangle. This is where the Gram-Schmidt process comes in. To get \(r_3\), we orthonormalize the vector from the center \(m\) of the triangle to vertex \(v_2\) with respect to \(r_1\) and \(r_2\): \(r_3 = \frac{\text{orth}(v_2 - m; r_1, r_2)}{\|\text{orth}(v_2 - m; r_1, r_2)\|}\)

Thus, we get the vertex-dependent rotation matrix \(R_v = [r_1, r_2, r_3]\).
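The three bullets above can be sketched in a few lines of plain Python. This is a hedged illustration, not the authors' code: the helper names (`sub`, `dot`, `cross`, `normalize`, `face_frame`) are hypothetical, the center \(m\) is assumed to be the centroid of the triangle, and degenerate triangles are not handled.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    length = math.sqrt(dot(a, a))  # zero for degenerate input; not handled here
    return tuple(x / length for x in a)

def face_frame(v1, v2, v3):
    """Return the centroid m and the orthonormal vectors (r1, r2, r3)."""
    m = tuple((p + q + r) / 3.0 for p, q, r in zip(v1, v2, v3))
    r1 = normalize(cross(sub(v2, v1), sub(v3, v1)))  # triangle normal
    r2 = normalize(sub(v1, m))                       # centroid -> v1
    w = sub(v2, m)                                   # centroid -> v2
    # Gram-Schmidt: strip the components of w along r1 and r2.
    for r in (r1, r2):
        c = dot(w, r)
        w = tuple(x - c * y for x, y in zip(w, r))
    r3 = normalize(w)
    return m, (r1, r2, r3)

# Hypothetical triangle lying in the xy-plane.
m, (r1, r2, r3) = face_frame((0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0))
```

For this triangle, \(r_1\) comes out as the z-axis, while \(r_2\) and \(r_3\) are two perpendicular unit vectors inside the xy-plane.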

Next, the scaling matrix \(S_v = \text{diag}(s_1, s_2, s_3)\) corresponds to the size of the Gaussian along the \(r_1, r_2, r_3\) axes.

- \(s_1\) is the scaling along the normal vector \(r_1\). To keep the Gaussian flat, it is set to a very small constant.

- \(s_2\) scales the Gaussian along the direction \(r_2\), i.e. from the centroid \(m\) to vertex \(v_1\).

- \(s_3\) scales the Gaussian along the direction \(r_3\). It is computed as a dot product that projects \(v_2\) onto the direction \(r_3\).

The blue lines on the left side of the figure above represent \(s_2\) and \(s_3\).
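Here is a rough sketch of how the scales and the frame combine into a covariance through the factorization \(\Sigma = R^T S S R\) quoted earlier. Several things are assumptions for illustration: \(r_1, r_2, r_3\) are treated as the rows of \(R_v\) (so that \(R_v^T S_v S_v R_v = \sum_i s_i^2\, r_i r_i^T\)), \(s_2\) is taken as the distance from the centroid to \(v_1\), \(s_3\) is measured from the centroid, and `eps` is an arbitrary small value. All names are hypothetical.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)

def face_covariance(v1, v2, v3, eps=1e-3):
    """Covariance of a flat Gaussian derived only from the triangle vertices."""
    m = tuple((p + q + r) / 3.0 for p, q, r in zip(v1, v2, v3))
    r1 = normalize(cross(sub(v2, v1), sub(v3, v1)))      # normal
    r2 = normalize(sub(v1, m))                           # centroid -> v1
    w = sub(v2, m)
    for r in (r1, r2):                                   # Gram-Schmidt step
        c = dot(w, r)
        w = tuple(x - c * y for x, y in zip(w, r))
    r3 = normalize(w)
    s1 = eps                                             # keeps the Gaussian flat
    s2 = math.sqrt(dot(sub(m, v1), sub(m, v1)))          # assumed: |m - v1|
    s3 = dot(sub(v2, m), r3)                             # assumed: measured from m
    rows, scales = (r1, r2, r3), (s1, s2, s3)
    # R^T S S R with the r_i as rows equals sum_i s_i^2 * outer(r_i, r_i).
    return [[sum(s * s * r[i] * r[j] for s, r in zip(scales, rows))
             for j in range(3)] for i in range(3)]

sigma_v = face_covariance((0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0))
```

The resulting matrix is symmetric, and its variance along the triangle normal is only `eps ** 2`, which is what keeps the Gaussian flat against the face.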

And so finally, we have the covariance matrix that is completely derived from the triangle vertices.

\[\Sigma_V = R^T_v S_v S_v R_v\]

Tying it All Together

It’s not just one Gaussian per triangle. We use \(k\) Gaussians (right side of the figure above) for each face, resulting in:

\[G_V = \{N(m_V(\alpha_1^i, \alpha_2^i, \alpha_3^i), \rho^i \Sigma_V)\}_{i=1}^k\]

As we can see, along with the \(\alpha\) parameters, the paper introduces a trainable shape parameter \(\rho\), which rescales the covariance \(\Sigma_V\) of each Gaussian. These values, together with opacity \(\sigma\) and color \(c\), are tuned during training. Thus, GaMeS uses a dense set \(\mathcal{G}_M\) of 3D Gaussians as:

\[\mathcal{G}_M = \bigcup_{V_i \in \mathcal{F}_M} \{ G_{V_i}, \sigma_{i,j}, c_{i,j} \}_{j=1}^{k}\]

This ensures that the Gaussians are positioned, oriented, and shaped according to the triangular mesh. The paper uses \(k=3\) and there are some nuances to it, like subdividing larger faces. I talk about that in the takeaway section.
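As a small illustration of the set \(G_V\), the sketch below builds \(k = 3\) Gaussians for a single face from per-Gaussian barycentric weights and \(\rho\) values. The function name, the example weights, the \(\rho\) values, and the stand-in \(\Sigma_V\) are all hypothetical; opacity and color are left out.

```python
def face_gaussians(vertices, alphas, rhos, sigma_v):
    """Return k (mean, covariance) pairs for one face.

    vertices: the three triangle vertices (v1, v2, v3)
    alphas:   k triples of barycentric weights, each summing to 1
    rhos:     k positive factors, one per Gaussian
    sigma_v:  3x3 face covariance shared by all k Gaussians
    """
    v1, v2, v3 = vertices
    gaussians = []
    for (a1, a2, a3), rho in zip(alphas, rhos):
        mean = tuple(a1 * p + a2 * q + a3 * r for p, q, r in zip(v1, v2, v3))
        cov = [[rho * x for x in row] for row in sigma_v]  # rho * Sigma_V
        gaussians.append((mean, cov))
    return gaussians

# Hypothetical face, weights, and a stand-in diagonal Sigma_V.
vertices = ((0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (0.0, 3.0, 0.0))
alphas = [(0.6, 0.2, 0.2), (0.2, 0.6, 0.2), (0.2, 0.2, 0.6)]
rhos = [1.0, 0.5, 0.25]
sigma_v = [[2.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1e-6]]

gaussians = face_gaussians(vertices, alphas, rhos, sigma_v)
```

All \(k\) Gaussians share the orientation of the face through \(\Sigma_V\); only their positions and overall sizes differ.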

b. Pseudo-Mesh Initialization and Distribution

When a mesh isn’t available, GaMeS creates one from the Gaussians. It’s called a pseudo-mesh because its triangle faces are not connected to each other. This pseudo-mesh initialization is done by:

- First, training a GS model with flat Gaussians (without a mesh).

- Second, for each Gaussian, creating a triangle. The triangles are derived using the Gaussians’ mean \(m\), rotation \(R\) and scaling \(S\).

- Finally, freezing the triangle vertices and reparameterizing the Gaussians. For each face \(V\), the corresponding Gaussian component is defined using formulas similar to the ones in Gaussian Parameterization, discussed before.
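The second step above can be sketched as follows. These notes don't spell out the exact formula, so the construction here is an assumption made purely for illustration: the first vertex sits at the Gaussian's mean, and the other two are reached by walking along the two in-plane axes by their scales. The function name and the example values are hypothetical.

```python
def gaussian_to_triangle(mean, rotation_rows, scales):
    """Turn one flat Gaussian into a triangle (assumed construction).

    rotation_rows: (r1, r2, r3), with r1 the flat (normal) direction
    scales:        (s1, s2, s3), with s1 the tiny scale along r1
    """
    _, r2, r3 = rotation_rows
    _, s2, s3 = scales
    v1 = tuple(mean)
    v2 = tuple(c + s2 * d for c, d in zip(mean, r2))  # step along r2
    v3 = tuple(c + s3 * d for c, d in zip(mean, r3))  # step along r3
    return v1, v2, v3

# Hypothetical flat Gaussian lying in the xy-plane.
mean = (1.0, 1.0, 0.0)
rotation_rows = ((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
scales = (1e-3, 2.0, 0.5)

v1, v2, v3 = gaussian_to_triangle(mean, rotation_rows, scales)
```

Each Gaussian yields its own stand-alone triangle, which matches the description of the pseudo-mesh as a set of unconnected faces.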

This generates a simple but controllable pseudo-mesh to start modifying and transforming the Gaussians. The authors state that facilitating the generation of an initial mesh is not the primary focus here. They also present results using an alternate mesh initialization method, namely FLAME.

My Takeaway

- I think the potential of GaMeS is particularly apparent in the reconstruction and generation of facial expressions. The alterations are not limited to stretching or bending. In Figure 16 of the paper, I was genuinely impressed to see the clean, realistic results after selectively removing specific sections like tree branches or leaves. Since Gaussians represent color from two sides, we can still see the inner side of the pot after removing the soil.

- The authors mention splitting large faces into smaller parts. They do this to adjust the area each Gaussian component should represent. I believe this is similar to the Adaptive Density Control in the vanilla 3DGS paper. The authors also add that it is not apparent how to adapt the Gaussians when mesh faces are split.

- The above point ties in with another limitation: using a fixed number of Gaussians per face. The authors point out that for models with varying face sizes, finding the ideal value of this parameter is not trivial. I believe there’s scope to produce even better results with a more adaptive approach, which could potentially address both of these issues.

- I think the Gaussian parameterization proposed in the paper is solid! Maybe I’m being too pedantic about meshes since reading SuGaR, but I think the pseudo-mesh generation and reparameterization can be improved. The paper also mentions optionally updating the meshes during training. I suppose improving the mesh representation would be a very interesting avenue to explore. For data without meshes, one strategy that comes to mind is: Start optimizing Gaussians like SuGaR -> Generate pseudo-mesh faces from the well-aligned Gaussians -> Use mesh simplification to refine the triangles -> Reparameterize Gaussians on this mesh -> Continue further training and tuning.

This concludes my notes on the paper GaMeS: Mesh-Based Adapting and Modification of Gaussian Splatting. Thank you for reading!